Using stepci to create automated API tests in yaml

Sick of writing tests in javascript? Want to use the same language as your CI and infra configs? Well now you can, using stepci.

Here's a snippet from the official website and what makes stepci different:

Step CI is an open-source API Quality Assurance framework

- Language-agnostic.

- Configure easily using YAML, JSON or JavaScript

- REST, GraphQL, gRPC, tRPC, SOAP. Test different API types in one workflow

- Self-hosted. Test services on your network, locally or with CI/CD

- Integrated. Play nicely with others

Sounds pretty cool. And different, which is always exciting with test automation tools.

Today I'll be creating some tests, exploring features and checking out what else stepci has to offer.

Getting started

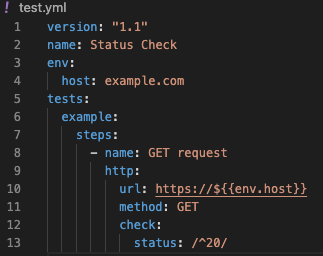

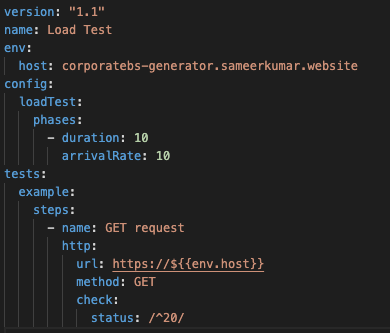

Below is the very first .yml test I created, following on from the getting started guide. It's pretty straightforward, with clear keywords such as env, tests and steps.
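For anyone reading without the screenshot, here is a minimal sketch of what such a workflow can look like. It is modelled on the style of the Step CI getting started guide rather than copied from my own file, and the host value, test name and step name are placeholders.

```yaml
# workflow.yml - a minimal sketch, not the exact file from the screenshot
# Assumed run command: stepci run workflow.yml
version: "1.1"
name: Status Check
env:
  host: example.com          # placeholder host, swap in your own
tests:
  example:                   # hypothetical test name
    steps:
      - name: GET request
        http:
          url: https://${{env.host}}
          method: GET
          check:
            status: /^20/    # pass on any 20x status code
```

The env block holds values you can reference with `${{env.name}}`, tests groups related steps, and each step describes one request plus its checks.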

To learn about the different keywords and what they do, jump here.

Syntax guide

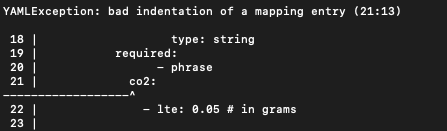

If you've created workflows for GitHub Actions or CircleCI before, you'll be comfortable with stepci's yaml syntax, since tests are written in the same style. This also means that indentation and command order are vital for success.

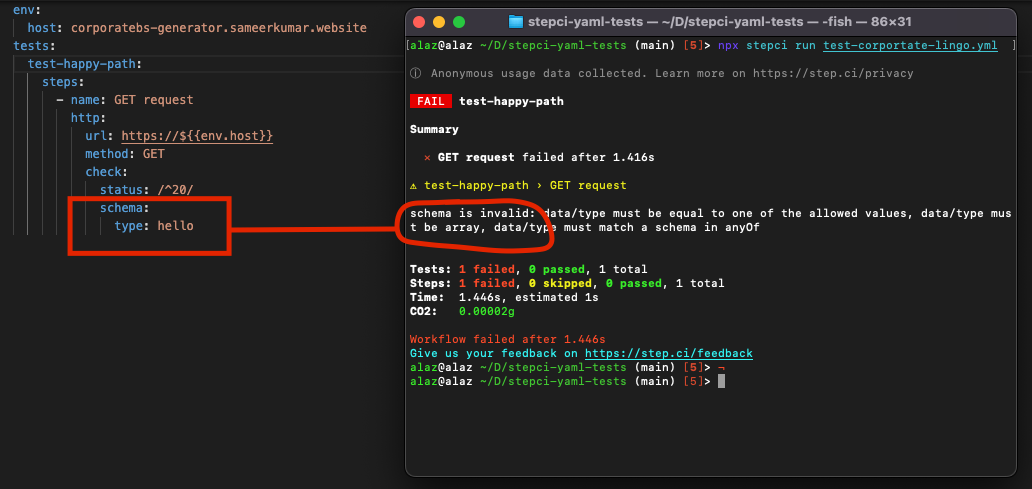

For those pesky indentation errors, stepci provides its own validations. Below is a yaml exception for an indentation error.

Test output

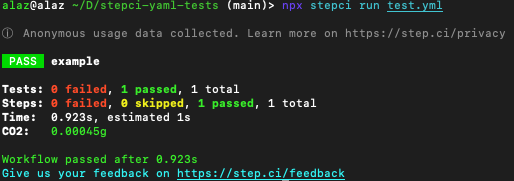

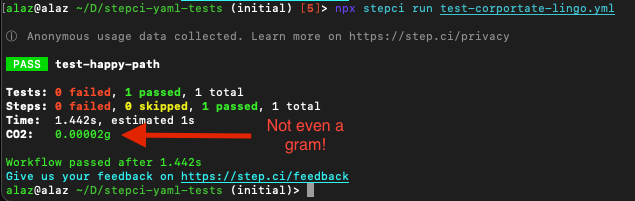

Below is a snapshot of what a passing test looks like.

It's clean and to the point, although I personally love to see my assertions included in the logs, alongside their respective results. Test checks only appear here if a test fails.

Additionally, there doesn't seem to be any ability to export results to a junit or json format, leaving the cli logs as the only output delivered.

Tests and assertions

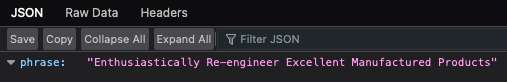

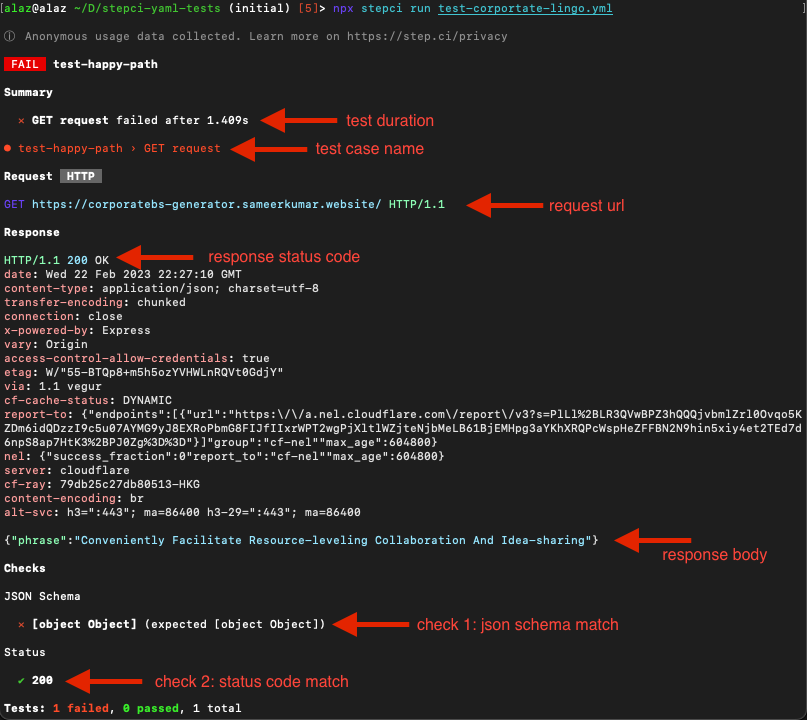

Now I'll use a custom api and run a few of the validations available. For this purpose I'll be using this handy corporate lingo API, https://corporatebs-generator.sameerkumar.website/. The api returns one random string on each call. Example below:

For this api, I'll look to run the following checks:

- check response for a 200 code

- check the body schema is type object

- check the body contains a phrase property
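As a rough sketch, the three checks above could be expressed like this. It assumes the check block accepts a status value and a JSON Schema under schema, which is how I understand the syntax guide; the workflow, test and step names are made up for illustration.

```yaml
# corporate-bs.yml - a sketch of the three checks listed above
# Names below (workflow, test, step) are illustrative only
version: "1.1"
name: Corporate BS generator
tests:
  phrase:
    steps:
      - name: Get a random phrase
        http:
          url: https://corporatebs-generator.sameerkumar.website/
          method: GET
          check:
            status: 200          # response code is 200
            schema:
              type: object       # body is a JSON object
              required:
                - phrase         # body contains the phrase property
```

The schema section is plain JSON Schema written as yaml, so anything you already know about JSON Schema keywords should carry over.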

In the example below, the test expects the response body of the above api to be of type hello, when in fact it is of type object.

I was unsure how to display a more verbose test output unfortunately.

However, by adding an additional check which looks for a required response property, I was able to get logs that proved much more helpful.

Looking at the command guide, I don't see how I can print all these helpful logs by default, which is a shame. Viewing responses and assertions is essential when writing tests. Perhaps it is possible using cli options, but for now I could only see the full logs whenever an error occurred.

Other cool features

That's all for the basics. Now let's look at a few 'cool' features.

Check your API carbon footprint

This was an unexpected feature and not something one would typically consider when running tests. But now you can!

When you're adding more tests, checks and overall code to your test suite, the carbon footprint figure increases accordingly. The ability to check the real-life effect of your tests is something I hadn't even thought of. Better start refactoring!

You can read more about how digital emissions are calculated in this article by Sustainable Web Design.

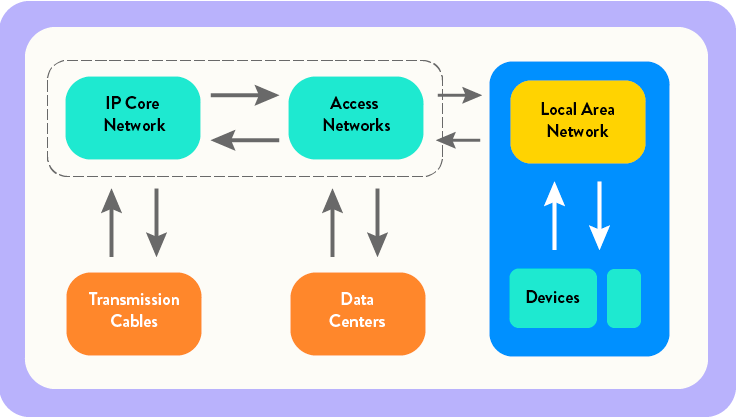

Testing APIs

The following api properties and commands are supported within tests:

- auth - basic, bearer, oauth, certificate

- query params

- body - json, plain

- and much more
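To give a feel for how these options sit together in one step, here is a hedged sketch. The endpoint, token value and field names are hypothetical, and the auth, params and json key names reflect my reading of the docs, so double-check them against the syntax guide before relying on them.

```yaml
# request-options.yml - a sketch combining auth, query params and a json body
# Endpoint, token and field values are hypothetical
version: "1.1"
name: Request options
env:
  token: replace-me              # placeholder, do not commit real tokens
tests:
  search:
    steps:
      - name: Authenticated search
        http:
          url: https://example.com/search
          method: POST
          auth:
            bearer:
              token: ${{env.token}}   # assumed bearer auth shape
          params:
            q: stepci                 # sent as ?q=stepci
          json:
            limit: 10                 # json request body
          check:
            status: 200
```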

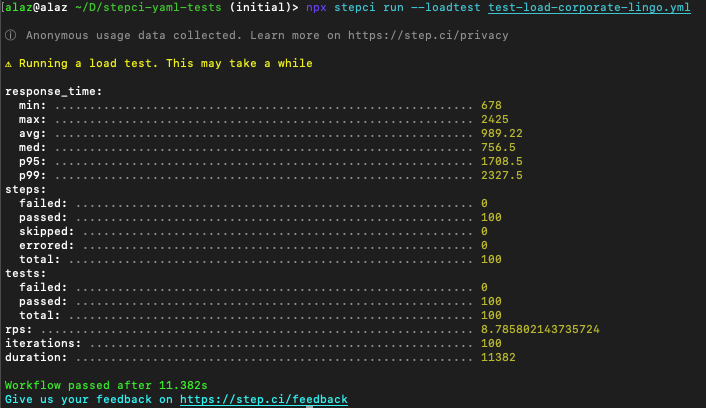

Load testing

Now if you've used Artillery before for load or performance testing, you'll fit right into stepci. To kick off a load test, remember to add --loadtest and away it goes.
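Here is a rough sketch of an Artillery-style load test setup. The config.loadTest.phases layout is an assumption based on my reading of the Step CI docs, and the numbers and names are arbitrary, so treat it as a starting point rather than a reference.

```yaml
# loadtest.yml - a sketch of a load test configuration
# Assumed run command: stepci run loadtest.yml --loadtest
version: "1.1"
name: Load test
config:
  loadTest:                  # assumed key name, check the docs
    phases:
      - duration: 10         # run this phase for 10 seconds
        arrivalRate: 5       # start 5 virtual users per second
env:
  host: example.com          # placeholder host
tests:
  example:
    steps:
      - name: GET request
        http:
          url: https://${{env.host}}
          method: GET
          check:
            status: /^20/
```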

I forgot --loadtest and spent quite a while stressing over why I couldn't run my load test ... Oops!

Interestingly though, for these load tests that run 10s, 100s or even 1000s of virtual users, I don't see any carbon footprint value calculated.

Now I'm concerned about all the load tests I've ever run and how much destruction I've caused 😬.

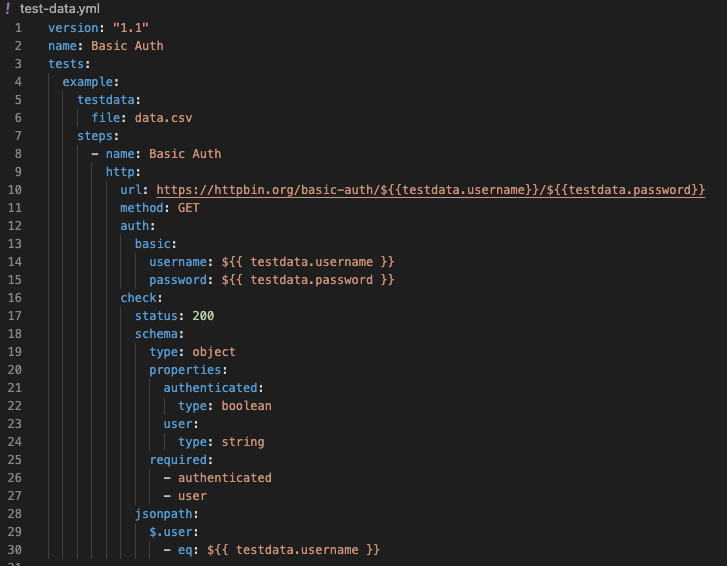

Test data

A staple of many test frameworks, this feature comes in handy when looping through massive data sets to validate functionality across multiple variations.

# test data csv

username,password

username1,password1

username2,password2

Using an endpoint which requires a username & password to login, I can loop through data sets, validating each scenario for:

- a 200 status code

- a matching json schema

- a json key matching test data
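A sketch of how that loop might be wired up is below. The login endpoint is hypothetical, I'm assuming the response echoes the username back, and the testdata and jsonpath key names follow my understanding of the docs rather than a verified reference.

```yaml
# login.yml - a sketch of a data-driven login test
# Endpoint and response shape are hypothetical
version: "1.1"
name: Login with test data
tests:
  login:
    testdata:
      file: testdata.csv               # the CSV shown above (assumed file name)
    steps:
      - name: Login
        http:
          url: https://example.com/login
          method: POST
          json:
            username: ${{testdata.username}}
            password: ${{testdata.password}}
          check:
            status: 200                # a 200 status code
            schema:                    # a matching json schema
              type: object
              required:
                - username
            jsonpath:                  # a json key matching test data
              $.username: ${{testdata.username}}
```

Each CSV row becomes one run of the steps, with the column headers available as `${{testdata.column}}`.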

The one downside I found here is that the loop stops immediately when an error has occurred, preventing any further executions. On the one hand this prevents more failures and allows you to resolve the issue right away. On the other, you could end up playing whack-a-mole and fixing issues one by one.

Perhaps instead a boolean option to 'continue/stop on error' is needed here.

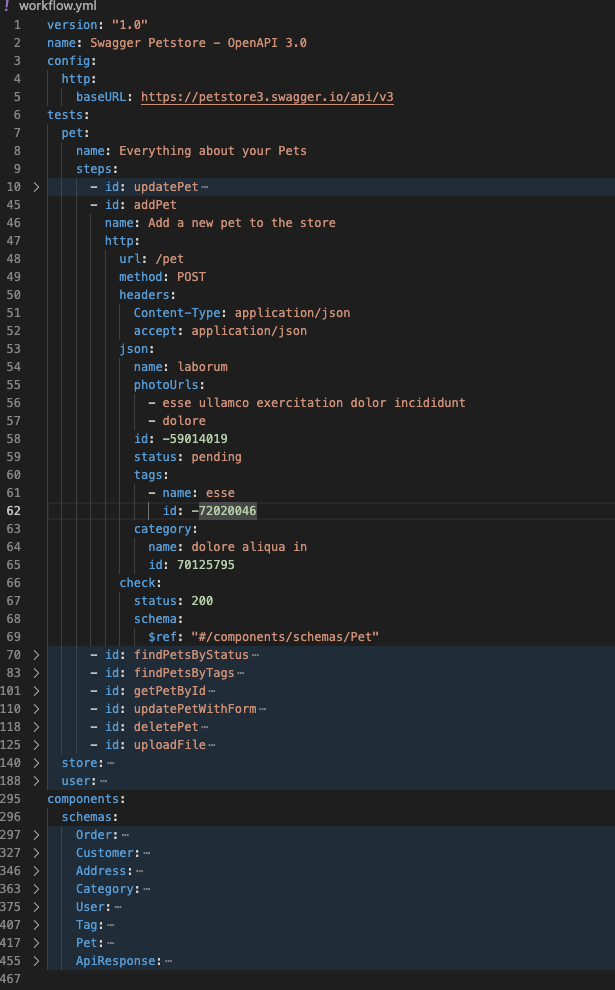

Generate tests from OpenAPI spec

Now this can be a massive time saver, depending on how 'complete' the spec is.

Using stepci, you can generate api tests directly from a swagger api definition. This is amazing to hear, as previously I've manually created postman tests based off swagger specs handed over to me.

Using the example provided in stepci docs, I can auto-generate tests directly from an api doc. Here's what it looks like:

Now, by utilising this built-in feature, you can cut down massively on manually creating these tests and speed up automation efforts.

That being said, if your api spec is updated by a BE dev, your test would quickly become out of date. To resolve this scenario, this form of test generation might be best suited to a CI job which hooks onto any api definition changes to auto-generate tests from the latest api spec.
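As a sketch of that idea, a GitHub Actions job could regenerate and run the tests whenever the spec file changes. The file paths are hypothetical, and the arguments passed to stepci generate are an assumption on my part, so confirm them with the CLI help before using this.

```yaml
# .github/workflows/api-tests.yml - a sketch, file paths are hypothetical
name: Regenerate API tests
on:
  push:
    paths:
      - "openapi.json"       # only trigger when the spec changes
jobs:
  generate-and-run:
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v4
      - uses: actions/setup-node@v4
        with:
          node-version: 20
      - name: Install stepci
        run: npm install -g stepci
      - name: Generate tests from the latest spec
        # assumed argument order: spec first, output path second
        run: stepci generate ./openapi.json ./generated.yml
      - name: Run the generated tests
        run: stepci run ./generated.yml
```

Because the tests are rebuilt on every spec change, they never need to be committed and can't drift from the api definition.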

Final thoughts

stepci opens the door to a new approach to test automation, using user-friendly yaml and clear commands that keep tests easy on the eye while still offering a good mix of technical features. It can comfortably achieve API automation and integrates into many CI/CD systems, with its yaml foundations making for seamless compatibility.

Below I've summarised the likes and dislikes I captured during my brief testing of this tool. These may very well be covered by current or future development so I'll make sure to keep an eye out.

Likes 👍

- tons of in-built features that support modern testing

- api-spec-to-test generation allows tests to directly match api docs

- clear yaml syntax makes tests easier to read and reduces typical code complexity

- tests are lightweight

- delivers exactly as the tool promises

Dislikes 👎

- unable to print api request/response pairs during test execution

- lacking test configuration options (e.g. retry threshold, continue/stop on error)

- unable to print/output test variable properties during debugging

- no junit/json test reports

At the end of the day, stepci is a lightweight, user-friendly and clever API automation framework that stands out from its GUI (postman) and similar (artillery) counterparts.

I look forward to keeping up with its roadmap and where it's headed. 🔮